Insights from a COMtalks Panel on Integrating AI with Journalism

Picture a newsroom where AI drafts stories in seconds, transcribes interviews instantly and optimizes headlines for maximum engagement. Now, imagine the risks: misinformation, job displacement, and ethical dilemmas. As AI continues to reshape industries, journalism finds itself at a thrilling and uncertain crossroads.

On Feb. 13, Boston University’s College of Communication hosted a virtual discussion as part of the COMtalks series, tackling a critical question: Is AI a boon or a bane for journalism?

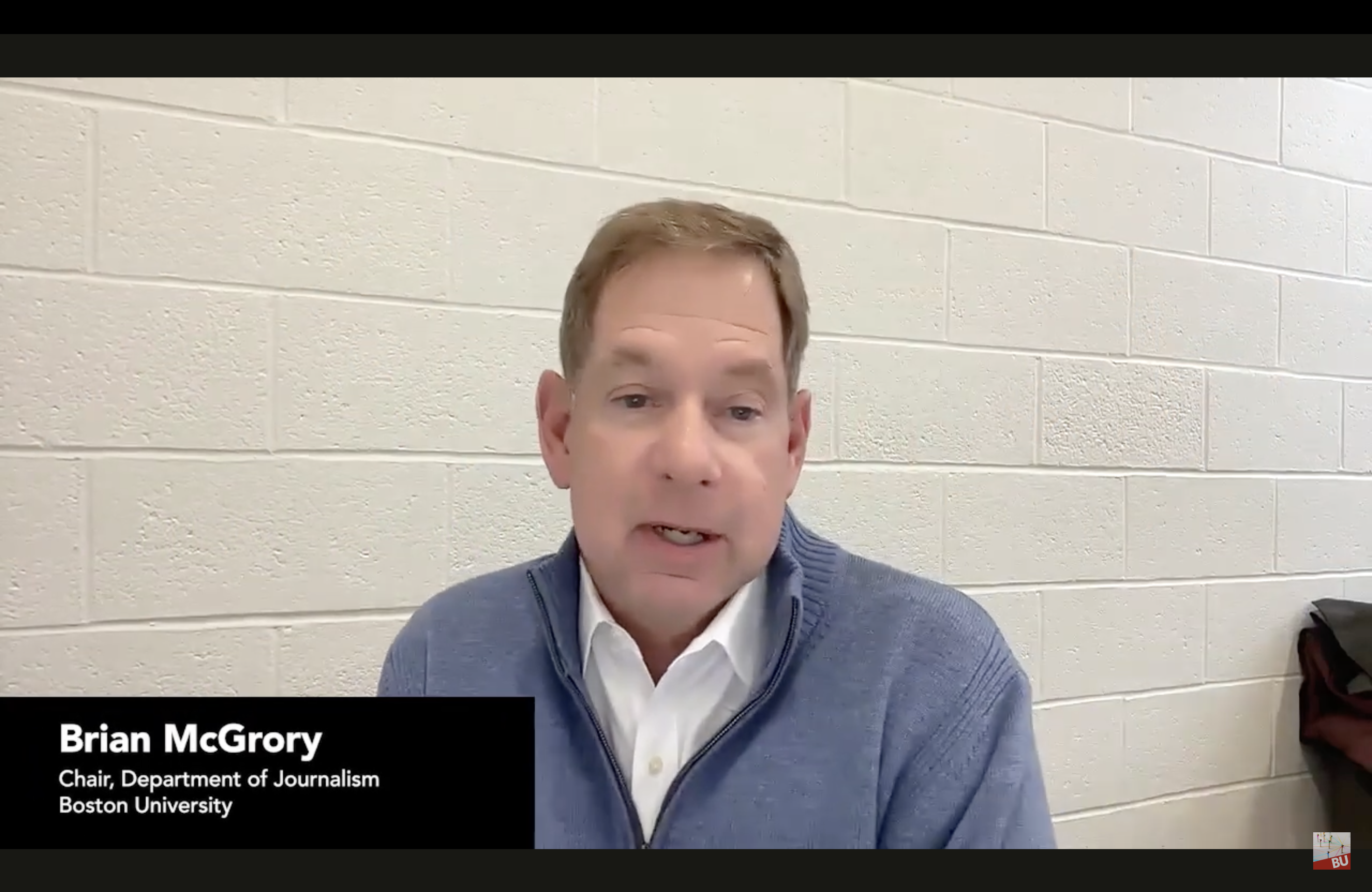

Brian McGrory, chair of Boston University’s journalism department, moderated the panel, which featured Michelle Johnson, professor emerita at Boston University and a former editor at The Boston Globe; Alfred Hermida, a professor at the University of British Columbia and co-founder of The Conversation Canada; and Mikey Centrella, director of product at PBS Digital Innovation. Together, they explored AI’s impact on newsrooms, balancing its vast potential with ethical and economic concerns.

AI: A Tool, Not a Threat

As the panel kicked off, the speakers quickly found common ground: AI isn’t journalism’s enemy—it’s a tool that, like past technological shifts, will become a natural part of newsroom workflows.

“What we’re seeing right now is very similar to what happened back then,” Johnson said, referencing past technological advances. “These tools will just become part of people’s daily jobs.”

Hermida provided historical context, noting that journalism has always adapted to technological disruptions.

“When radio came along, newspapers feared losing their audience,” he said. “Then television came, then the internet. We’ve been here before.”

Centrella pointed out that AI is already embedded in newsroom operations, from transcription services to predictive analytics.

“Media companies and publishers have already been using AI for years: automation, prediction tools, sentiment analysis, and transcribing interviews,” he said. “The shift we’re seeing now is in generative AI, which brings new challenges.”

While AI can improve newsroom efficiency, the panelists agreed that its role should be to enhance, not replace, human journalists. Johnson emphasized that journalists must figure out how to integrate AI effectively.

“It cannot be some bot running the show,” she said. “There’s always going to be a human component involved in this.”

Ethics, Accuracy, and the Human Touch

While AI can increase efficiency, concerns over accuracy and ethics remain pressing. AI-generated content, if left unchecked, can introduce factual errors, biases, and even plagiarism. The panelists agreed that transparency is key to audience trust.

Centrella shared an example from PBS’s American Historia series, where AI was used to recreate historical scenes. The network ensured transparency by clearly labeling AI-generated elements on-screen and online.

“We asked them to disclose that both at the start of the production with a lower third on the screen and on the website,” he said.

Johnson highlighted that while AI can assist in content production, it cannot replace journalistic judgment.

“We need to recognize that AI is just a tool; it’s up to journalists to fact-check, verify, and apply ethical standards,” she said.

Hermida added that AI-generated summaries of government meetings could be beneficial for newsrooms with limited staff, but only if audiences are informed of their use.

“Think of AI as an intern,” he said. “It can assist, generate ideas, and speed up processes, but it still needs oversight. You wouldn’t publish an intern’s work without checking it, and AI should be treated the same way.”

Preparing Tomorrow’s Journalists: AI in the Classroom

Beyond professional newsrooms, journalism education must also evolve to equip future reporters with AI literacy. The panelists emphasized that this literacy is just as important as any other journalistic skill, with a focus on responsible use rather than technical expertise.

Johnson said journalism education must adapt, encouraging students to critically engage with AI rather than ignore or fear it.

“Instead of banning AI, we should be helping students analyze its role in reporting,” she said.

She suggested using AI-generated content as a teaching tool, where students could fact-check and refine AI-generated drafts to meet professional standards.

Hermida described an assignment he introduced in his research class, where instead of having students write a research paper, he asked them to use ChatGPT to generate one. Then, students took on the role of instructors, grading the AI-generated papers. The exercise, he said, helped students recognize AI’s limitations, such as its tendency to fabricate citations and introduce inaccuracies, reinforcing the importance of critical evaluation.

Centrella noted that students are already using AI, whether educators acknowledge it or not.

“The challenge isn’t stopping them, but teaching them how to use it responsibly,” he said.

Instead of discouraging AI use, he advocated for structured guidance, ensuring students understand when AI is a helpful tool and when it might compromise journalistic integrity.

The Pace and Impact of AI in Journalism

The panelists engaged in a heated discussion about the pace of AI development and its long-term implications for journalism. While Centrella called current AI models “B-minus work” and emphasized their probabilistic nature, Johnson disagreed.

“I think it’s actually more advanced than that,” Johnson said. “This is going to happen faster than we think. There are emerging models that are self-learning, which means they won’t just be predictive—they will actively improve without human input.”

Hermida said AI will inevitably become a fundamental part of journalism, much like social media has. However, he stressed that the industry must ensure its implementation prioritizes journalistic integrity over corporate interests.

“The question isn’t whether AI will change journalism; it’s whether journalists will take the lead in defining how AI is used,” he said.

The panelists debated whether AI’s evolution would be gradual or rapid. Centrella suggested that AI will continue improving but cautioned against assuming it could replace human creativity and judgment.

The Road Ahead

AI’s presence in newsrooms is inevitable, but the industry’s response will determine whether it strengthens or weakens the integrity of journalism. Striking a balance between automation and editorial judgment will be crucial. While AI can assist with tasks such as data analysis and content personalization, it cannot replace the fundamental principles of investigative reporting, verification, and storytelling.

As AI becomes more sophisticated, its adoption in journalism must be guided by responsibility and foresight. The technology should complement journalistic work, not substitute for it. But beyond the immediate concerns of accuracy and accountability, broader questions remain: How will AI shape the way stories are told? Will it elevate journalism’s role in society or dilute its impact?

AI is here to stay. Whether it proves to be a friend, a foe, or just a tool in the newsroom depends on how we choose to wield it.
